After reading this, you will be able to run Python files and Jupyter notebooks that execute Apache Spark code in your local environment. This tutorial applies to OS X and Linux systems. We assume you already know Python and are comfortable working in a console environment.

1. Download Apache Spark

- The system install of Python on macOS is not supported. Instead, an installation through Homebrew is recommended. To install Python using Homebrew on macOS, run brew install python3 at the Terminal prompt. Note: on macOS, make sure the location of your VS Code installation is included in your PATH environment variable.

We will download the latest version available at the time of writing, 3.0.1, from the official website.

Download it and extract it on your computer. The path I'll be using for this tutorial is /Users/myuser/bigdata/spark. This folder will contain all the Spark files.

Now, I will edit the .bashrc file, located in my user's home directory.

Then we will update our environment variables so that we can execute Spark programs and our Python environments can locate the Spark libraries.
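The exact lines are not shown here, so the following is only a sketch of what such a .bashrc addition could look like. It assumes the install path used in this tutorial, and it assumes the download ships a py4j source archive under python/lib whose file name varies by release, which is why a glob is used instead of a fixed version.

```shell
# Assumed install location from this tutorial; adjust to your own path.
export SPARK_HOME="/Users/myuser/bigdata/spark"

# Make spark-shell, pyspark and spark-submit available on the command line.
export PATH="$SPARK_HOME/bin:$PATH"

# Let Python find the pyspark package bundled with the Spark download.
export PYTHONPATH="$SPARK_HOME/python${PYTHONPATH:+:$PYTHONPATH}"

# Add the bundled py4j archive if one is present (name depends on the release).
for zip in "$SPARK_HOME"/python/lib/py4j-*-src.zip; do
  if [ -e "$zip" ]; then
    export PYTHONPATH="$zip:$PYTHONPATH"
  fi
done
```

Prepending the Spark bin directory to PATH means these launcher scripts take precedence over any other copies found later in the search path.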

Save the file and load the changes by executing $ source ~/.bashrc. If this worked, you will be able to open a Spark shell.
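A quick way to confirm that the new PATH took effect is to check for the launcher script before starting it. This is a minimal sketch; the variable name spark_status is only illustrative.

```shell
# Report whether the Spark launcher scripts are on the PATH.
if command -v spark-shell >/dev/null 2>&1; then
  spark_status="found"
  # Print the Spark version banner without starting an interactive shell.
  spark-shell --version
else
  spark_status="missing"
  echo "spark-shell not found; check SPARK_HOME and PATH in ~/.bashrc" >&2
fi
```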

We are now done installing Spark.

2. Install Visual Studio Code

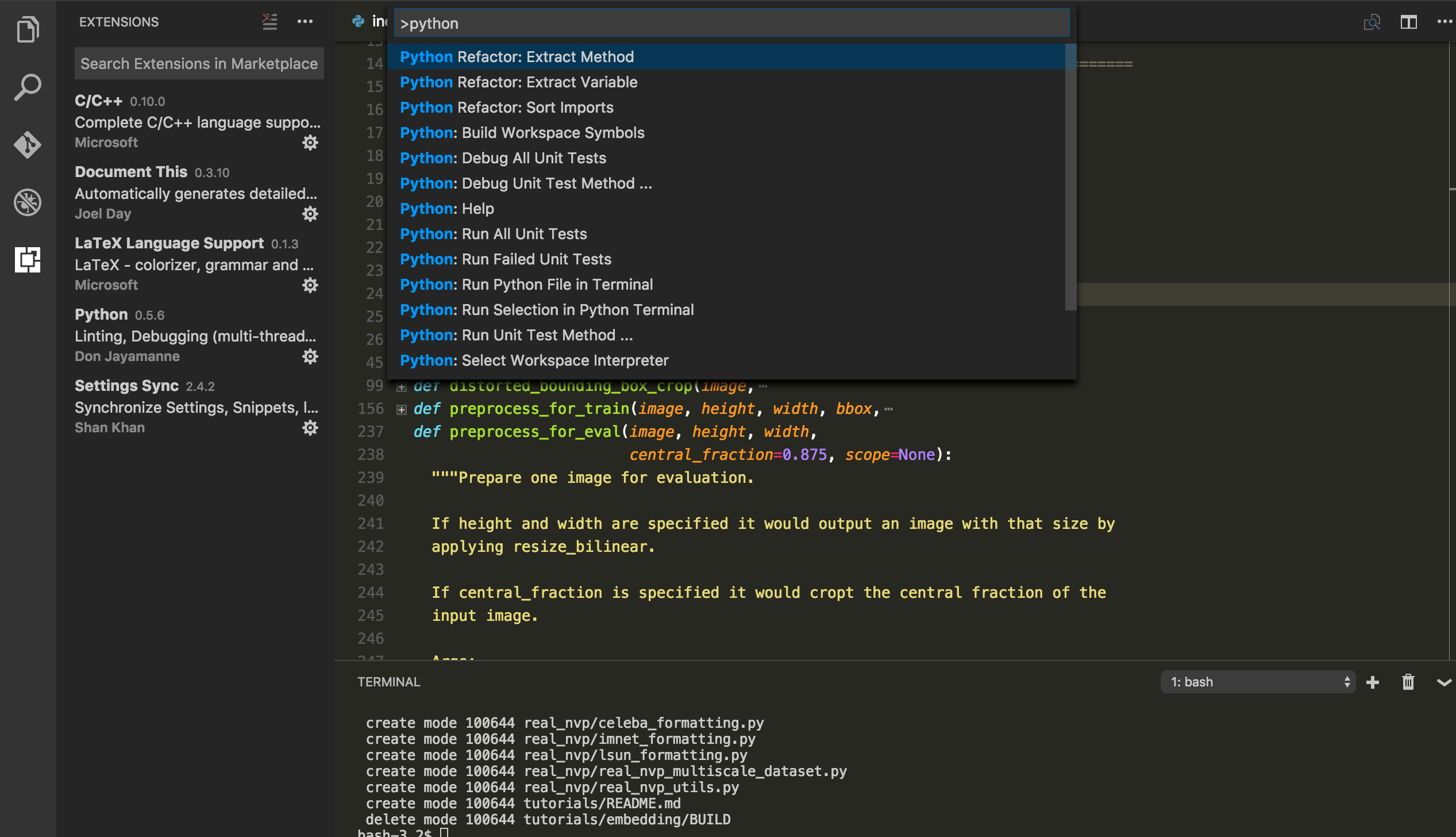

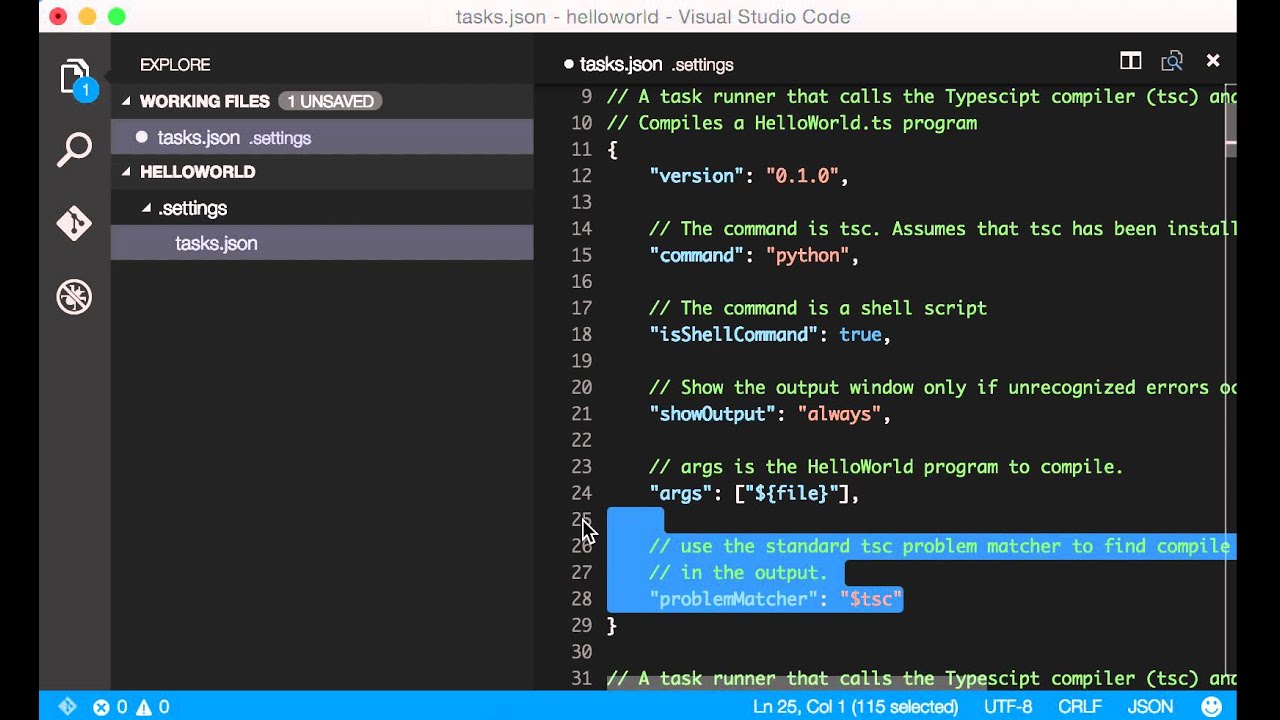

One of the good things about this IDE is that it allows us to run Jupyter notebooks within it. Follow the set-up instructions and then install Python and the VS Code Python extension.

Then, open a new terminal and install the pyspark package via pip: $ pip install pyspark. Note: depending on your installation, the command may be pip3 instead.

3. Run your pyspark code

Create a new file or notebook in VS Code. You should be able to execute it and get some results using the Pi example provided by the library itself.
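The Pi example can also be tried from a terminal before moving to VS Code. The sketch below assumes the SPARK_HOME used earlier in this tutorial and assumes the example script sits at examples/src/main/python/pi.py inside the extracted download; the variable PI_EXAMPLE is only illustrative.

```shell
# Fall back to this tutorial's install path if SPARK_HOME is not set.
SPARK_HOME="${SPARK_HOME:-/Users/myuser/bigdata/spark}"

# Assumed location of the Pi example bundled with the Spark download.
PI_EXAMPLE="$SPARK_HOME/examples/src/main/python/pi.py"

if [ -f "$PI_EXAMPLE" ] && command -v spark-submit >/dev/null 2>&1; then
  # The trailing number is the partition count the example splits its work into.
  spark-submit "$PI_EXAMPLE" 10
else
  echo "Pi example or spark-submit not found; check your Spark installation" >&2
fi
```

If this runs, the job output should include a line with an approximation of Pi, which confirms that Spark and Python can talk to each other.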

Troubleshoot

If you are on a distribution that installs only python3 by default (e.g. Ubuntu 20.04), pyspark will most likely fail with an error message like pysparkenv: 'python': No such file or directory.

The first option to fix it is to add the following content to your .profile or .bashrc file
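The original snippet is not reproduced here. One common approach, offered as an assumption rather than as the exact content intended, is to point Spark's PYSPARK_PYTHON environment variable at the python3 interpreter:

```shell
# Assumption: tell PySpark to use python3 instead of looking for "python".
export PYSPARK_PYTHON=python3
```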

Remember to always reload the configuration via source ~/.bashrc.

In my case, this solution worked when I executed pyspark from the command line, but not from a VS Code notebook. Since I am using a Debian-based distribution, installing the following package fixed it:

sudo apt-get install python-is-python3